
Today, let’s explore web scraping with Python.

What is web scraping?

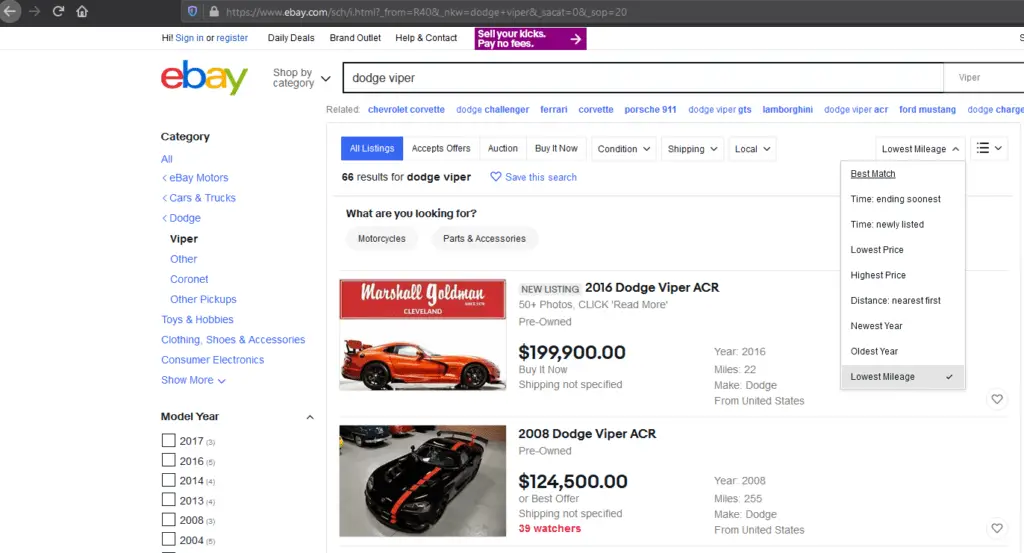

Web scraping is the act of programmatically extracting information from a website, such as all the text on a page, a table, or a list. It is a great way to collect and process information from a website that does not offer an API. Let’s say you want to monitor the average price of a car on eBay, but there is no API available to fetch car listings; web scraping lets you extract details like the car model, year, mileage, and price. In today’s tutorial, we will gather data from eBay using BeautifulSoup.
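To make the idea concrete, here is a minimal sketch that pulls the rows out of an HTML table with BeautifulSoup. The HTML string and its values are made up and stand in for a downloaded page, so the example runs without any network request.

```python
from bs4 import BeautifulSoup

# Made-up HTML standing in for a page you have downloaded
html = """
<table id="listings">
  <tr><th>Model</th><th>Year</th><th>Price</th></tr>
  <tr><td>Viper GTS</td><td>1997</td><td>$74,900.00</td></tr>
  <tr><td>Viper SRT-10</td><td>2004</td><td>$46,790.00</td></tr>
</table>
"""

soup = BeautifulSoup(html, "html.parser")

# One list of cell texts per table row (header cells included)
rows = [
    [cell.get_text(strip=True) for cell in tr.find_all(["th", "td"])]
    for tr in soup.find("table", id="listings").find_all("tr")
]

for row in rows:
    print(row)
```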

Installing BeautifulSoup (bs4)

Since December 2020, BeautifulSoup no longer supports Python 2. I will be using Python 3 for this tutorial, which is supported and still actively developed. To install the library, along with the requests library used below to download pages, run the following command from the terminal using pip. If pip is not installed, please see this tutorial on how to set it up:

```
pip install beautifulsoup4 requests
```

Getting started with BeautifulSoup

Import Libraries

Before you can get started with BeautifulSoup, you will need to import the requests and bs4 modules. Use the code below to import both:

```python
from bs4 import BeautifulSoup
import requests
```

Create a request to the page you want to scrape data from. Since I am interested in tracking the prices of Dodge Vipers on eBay, let’s go to eBay, search for Dodge Vipers, and filter by Lowest Mileage.

Copy the URL that eBay generates, then make a new request to it in Python and assign the response to a variable named page:

```python
page = requests.get('https://www.ebay.com/sch/i.html?_from=R40&_nkw=dodge+viper&_sacat=0&_sop=20')
```

Next, initialize BeautifulSoup, passing page.text and 'html.parser' as arguments:

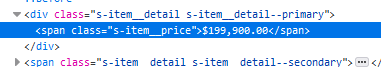

```python
soup = BeautifulSoup(page.text, 'html.parser')
```

Since we want only the price (as text, not HTML), you need to find the HTML element that contains it. An easy way to do this is to right-click the price in the browser and click “Inspect Element” (labeled “Inspect” in some browsers). The browser’s developer tools will open with the HTML element that contains the price selected.

As you can see, the price is in a span element with the class s-item__price. Let’s see how you can extract this information in Python.
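Before pointing find_all at the live page, it can help to try the selector on a small snippet copied from the developer tools. The sketch below uses a hypothetical fragment modeled on the price element described above (the extra class s-item__price--sale and the shipping span are invented for illustration). It also shows that class_ matches an element when any one of its classes matches.

```python
from bs4 import BeautifulSoup

# Hypothetical fragment modeled on the price element seen in the developer tools
snippet = """
<div>
  <span class="s-item__price">$199,900.00</span>
  <span class="s-item__price s-item__price--sale">$124,500.00</span>
  <span class="s-item__shipping">Free shipping</span>
</div>
"""

soup = BeautifulSoup(snippet, "html.parser")

# find returns only the first match; find_all returns every match
first = soup.find("span", class_="s-item__price")
every = soup.find_all("span", class_="s-item__price")

print(first.get_text())
# The second span matches too, because one of its classes is s-item__price
print([span.get_text() for span in every])
```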

Extracting an HTML element from BeautifulSoup

To extract every price on the page, use BeautifulSoup’s built-in find_all method, specifying the HTML tag name and the class associated with it. Assign the result to a variable named prices:

```python
prices = soup.find_all('span', class_='s-item__price')
```

If you iterate over prices, you should see each element:

```python
for price in prices:
    print(price)
```

Output:

```
<span class="s-item__price">$199,900.00</span>
<span class="s-item__price">$124,500.00</span>
<span class="s-item__price">$189,000.00</span>
<span class="s-item__price">$59,900.00</span>
<span class="s-item__price">$181,995.00</span>
<span class="s-item__price">$57,900.00</span>
<span class="s-item__price">$50.00</span>
<span class="s-item__price">$57,900.00</span>
<span class="s-item__price">$59,995.00</span>
<span class="s-item__price">$169,990.00</span>
...
```

As you can see, the prices have been printed out, but they are still wrapped in HTML tags. To remove the surrounding tags, use the .text attribute:

```python
for price in prices:
    print(price.text)
```

Output:

```
$199,900.00
$124,500.00
$189,000.00
$59,900.00
$181,995.00
$57,900.00
$50.00
$57,900.00
$59,995.00
$169,990.00
$50,000.00
$179,980.00
...
```

Using BeautifulSoup on multiple pages

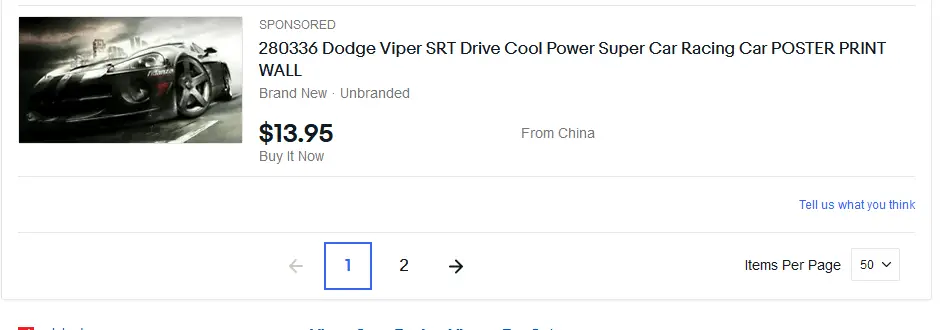

So far, we have only scraped one page of data. If you look at the eBay results for Dodge Vipers, you will notice that there are two pages.

When you click on the second page, notice how the link changes.

First page:

```
https://www.ebay.com/sch/i.html?_from=R40&_nkw=dodge+viper&_sacat=0&_sop=20
```

Second page:

```
https://www.ebay.com/sch/i.html?_from=R40&_sacat=0&_nkw=dodge+viper&_sop=20&_pgn=2
```

You can see that when you click on the second page, &_pgn=2 is appended to the URL. If you replace &_pgn=2 with &_pgn=1, you will be taken back to the first page. Using this logic, you can write a for loop in Python that scrapes each page in turn. Let’s see how this would look:

```python
from bs4 import BeautifulSoup
import requests

# Loop over the page numbers you wish to query (pages 1 and 2)
for page_num in range(1, 3):
    # Dynamically pass the page number to the URL
    page = requests.get('https://www.ebay.com/sch/i.html?_from=R40&_sacat=0&_nkw=dodge+viper&_sop=20&_pgn=' + str(page_num))

    # Initialize BeautifulSoup and find all spans with the price class
    soup = BeautifulSoup(page.text, 'html.parser')
    prices = soup.find_all('span', class_='s-item__price')

    # Print the text of each price span
    for price in prices:
        print(price.text)
```

In this example, I have created a loop that iterates over the page numbers 1 and 2 (stored in page_num) and dynamically sets the _pgn parameter to the matching page number. This loads both pages, scrapes the prices, and prints the results to the terminal.
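Concatenating strings works, but building the query string with urllib.parse.urlencode from the standard library avoids escaping mistakes when the search term changes. Here is a minimal sketch, assuming the same eBay parameters that appear in the URLs above (_nkw for the search term, _sop=20 for the sort order, and _pgn for the page number); the helper name search_url is hypothetical.

```python
from urllib.parse import urlencode

BASE_URL = "https://www.ebay.com/sch/i.html"


def search_url(query, page_num):
    """Build an eBay search URL for one results page (hypothetical helper)."""
    params = {
        "_from": "R40",
        "_sacat": 0,
        "_nkw": query,     # search term; urlencode turns spaces into '+'
        "_sop": 20,        # sort order copied from the URLs above
        "_pgn": page_num,  # results page number
    }
    return BASE_URL + "?" + urlencode(params)


for page_num in range(1, 3):
    print(search_url("dodge viper", page_num))
```

Each generated URL can be passed to requests.get exactly as in the loop above. When scraping many pages, a short time.sleep between requests keeps the load on the site light.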

Using BeautifulSoup and Pandas

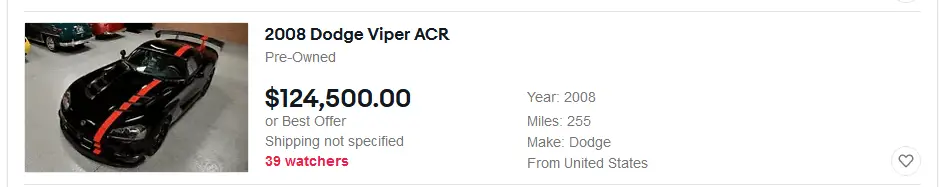

Since I am interested in buying a Dodge Viper, I care about more than the price: the year and mileage matter too. Let’s look at how you can extract this information and convert it into a data frame using pandas.

To install pandas, run the following command:

```
pip install pandas
```

Then import it into Python:

```python
import pandas as pd
```

On eBay, you can see that each car listing has the following fields: Price, Year, Miles, and Make.

Using the same method as above, inspect the element for each field and use soup.find_all to extract the information from each span:

```python
from bs4 import BeautifulSoup
import requests
import pandas as pd

# Loop over the page numbers you wish to query
for page_num in range(1, 3):
    # Dynamically pass the page number to the URL
    page = requests.get('https://www.ebay.com/sch/i.html?_from=R40&_sacat=0&_nkw=dodge+viper&_sop=20&_pgn=' + str(page_num))

    # Initialize BeautifulSoup and find all spans with the specified classes
    soup = BeautifulSoup(page.text, 'html.parser')
    prices = soup.find_all('span', class_='s-item__price')
    years = soup.find_all('span', class_='s-item__dynamic s-item__dynamicAttributes1')
    mileage = soup.find_all('span', class_='s-item__dynamic s-item__dynamicAttributes2')
```

Note that when the class_ value contains a space, BeautifulSoup matches it against the full class attribute, so the classes must appear in exactly that order. Now you have three lists: prices, years, and mileage. To transform them into a more readable format, let’s create a data frame. Using list(zip(...)), you can combine all three lists into the columns of a data frame. Finally, print the contents of the data frame:

```python
from bs4 import BeautifulSoup
import requests
import pandas as pd

# Collect the results from every page in these lists
prices, years, mileage = [], [], []

# Loop over the page numbers you wish to query
for page_num in range(1, 3):
    # Dynamically pass the page number to the URL
    page = requests.get('https://www.ebay.com/sch/i.html?_from=R40&_sacat=0&_nkw=dodge+viper&_sop=20&_pgn=' + str(page_num))

    # Initialize BeautifulSoup and add this page's spans to the lists
    soup = BeautifulSoup(page.text, 'html.parser')
    prices.extend(soup.find_all('span', class_='s-item__price'))
    years.extend(soup.find_all('span', class_='s-item__dynamic s-item__dynamicAttributes1'))
    mileage.extend(soup.find_all('span', class_='s-item__dynamic s-item__dynamicAttributes2'))

# Pair the i-th price, year and mileage into one row of the data frame
df = pd.DataFrame(list(zip(prices, years, mileage)))
print(df)
```

Output:

```
0 [$46,790.00] [Year: 2004] [Miles: 23,928]
1 [$42,500.00] [Year: 1998] [Miles: 25,782]
2 [$74,900.00] [Year: 1997] [Miles: 27,600]
3 [$41,977.00] [Year: 1995] [Miles: 27,651]
4 [$500.00] [Year: 2009] [Miles: 27,930]
5 [$64,900.00] [Year: 1998] [Miles: 28,243]
6 [$35,888.00] [Year: 1995] [Miles: 33,814]
7 [$49,500.00] [Year: 2005] [Miles: 34,901]
8 [$44,995.00] [Year: 2002] [Miles: 37,287]
9 [$74,000.00] [Year: 2002] [Miles: 38,238]
10 [$40,000.00] [Year: 2003] [Miles: 45,000]
11 [$59,800.00] [Year: 2003] [Miles: 45,022]
12 [$46,490.00] [Year: 2002] [Miles: 48,891]
13 [$49,995.00] [Year: 1996] [Miles: 49,987]
14 [$70,100.00] [Year: 2013] [Miles: 53,000]
15 [$52,500.00] [Year: 2003] [Miles: 56,500]
```

Now, you can see that you have a data frame with all the relevant information from eBay.
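The cells in that data frame still hold BeautifulSoup tags, which is why each value prints inside brackets. Below is a sketch of one way to turn them into numbers you can sort and average, assuming each cell’s text follows the pattern in the output above ("$46,790.00", "Year: 2004", "Miles: 23,928"). The column names are my own choice, and the rows are three values copied from that output so the example runs without a network request.

```python
import pandas as pd

# Three rows copied from the output above, as plain text (e.g. via .get_text())
raw = [
    ("$46,790.00", "Year: 2004", "Miles: 23,928"),
    ("$42,500.00", "Year: 1998", "Miles: 25,782"),
    ("$500.00", "Year: 2009", "Miles: 27,930"),
]

df = pd.DataFrame(raw, columns=["price", "year", "miles"])

# Strip everything except digits (and the decimal point for prices), then convert
df["price"] = df["price"].str.replace(r"[^0-9.]", "", regex=True).astype(float)
df["year"] = df["year"].str.extract(r"(\d{4})", expand=False).astype(int)
df["miles"] = df["miles"].str.replace(r"[^0-9]", "", regex=True).astype(int)

print(df)
print("Average price:", df["price"].mean())
```

With the scraped lists, the raw rows could be built as [(p.get_text(), y.get_text(), m.get_text()) for p, y, m in zip(prices, years, mileage)] before creating the data frame.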

Navigating the HTML DOM with BeautifulSoup is easy. You can use the following functions and accessors to help with web scraping:

Useful BeautifulSoup Functions

| Function | Description |
| --- | --- |
| find | Returns the first element matching the given filters (tag name, attributes, etc.). |
| find_all | Returns a list of all elements matching the given filters. |
| find_all_next | Returns all matching elements that appear after the current element in the document. |
| find_all_previous | Returns all matching elements that appear before the current element in the document. |
| find_next | Returns the first matching element after the current element. |
| find_next_sibling | Returns the first matching sibling after the current element. |
| find_next_siblings | Returns all matching siblings after the current element. |
| find_parent | Returns the closest matching ancestor of the current element. |
| find_parents | Returns all matching ancestors of the current element. |
| find_previous | Returns the first matching element before the current element. |
| find_previous_sibling | Returns the first matching sibling before the current element. |
| find_previous_siblings | Returns all matching siblings before the current element. |
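Zipping three separate find_all results assumes every listing has a price, a year, and a mileage; if one listing is missing a field, every row after it shifts. One way around that is to start from each price, use find_parent to climb back to the listing that contains it, and then search only inside that listing. This is a sketch, assuming each listing is wrapped in an li element with the class s-item; that structure is hypothetical, so check the real markup in the developer tools.

```python
from bs4 import BeautifulSoup

# Hypothetical markup: the second listing has no mileage span
html = """
<ul>
  <li class="s-item">
    <span class="s-item__price">$46,790.00</span>
    <span class="s-item__dynamic s-item__dynamicAttributes1">Year: 2004</span>
    <span class="s-item__dynamic s-item__dynamicAttributes2">Miles: 23,928</span>
  </li>
  <li class="s-item">
    <span class="s-item__price">$42,500.00</span>
    <span class="s-item__dynamic s-item__dynamicAttributes1">Year: 1998</span>
  </li>
</ul>
"""

soup = BeautifulSoup(html, "html.parser")


def text_or_none(tag):
    """Return the tag's stripped text, or None when the field is missing."""
    return tag.get_text(strip=True) if tag is not None else None


rows = []
for price in soup.find_all("span", class_="s-item__price"):
    # Climb from the price span to the listing that contains it
    listing = price.find_parent("li", class_="s-item")
    # Search only inside this listing, so a missing field stays missing
    year = listing.find("span", class_="s-item__dynamicAttributes1")
    miles = listing.find("span", class_="s-item__dynamicAttributes2")
    rows.append((price.get_text(strip=True), text_or_none(year), text_or_none(miles)))

print(rows)
```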

Useful BeautifulSoup Accessors

| Accessor | Description |
| --- | --- |
| .contents | A list of the element’s direct children. |
| .children | An iterator over the element’s direct children. |
| .parent / .parents | The parent element / a generator over all ancestors of the current element. |
| .next_sibling / .next_siblings | The first sibling after the current element / all siblings after it. |
| .previous_sibling / .previous_siblings | The first sibling before the current element / all siblings before it. |
| .next_element / .next_elements | The element parsed immediately after the current one / all elements parsed after it. |
| .previous_element / .previous_elements | The element parsed immediately before the current one / all elements parsed before it. |

Conclusion

This tutorial showed you the power of scraping data from a website, primarily using BeautifulSoup’s find_all method. I walked through an example of scraping car listings from eBay across multiple pages of results, and listed the most commonly used BeautifulSoup functions and accessors.

That’s all for web scraping with Python! As always, if you have any questions or comments, please feel free to post them below. Additionally, if you run into any issues, please let me know.

